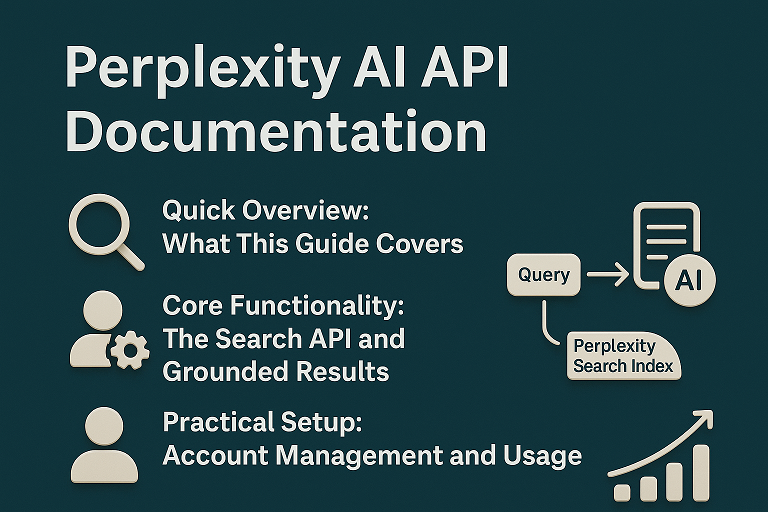

Introduction to Analyzing Search Rankings in Perplexity AI

Search rankings don’t mean what they used to. In Perplexity AI, there’s no blue-link ladder to climb, no classic “position one” trophy. Instead, there’s something far more valuable: citation. If Perplexity quotes your content as a source, you’ve won visibility, trust, and influence in one shot. Miss that, and you’re invisible—no matter how good your traditional SEO looks.

Let’s break down how to analyze search rankings in Perplexity AI through the lens of Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO)—where the real competition is about being selected, not ranked.

Understanding the New Rules of AI Search

From Link Rankings to Answer Engines

Traditional search engines behave like librarians. They hand you a list of books and say, “Good luck.” Perplexity AI behaves more like a researcher. It reads everything, synthesizes the answer, and then tells you where that answer came from.

That’s a massive shift.

Why Perplexity AI Thinks Differently Than Google

Perplexity runs on large language models (LLMs) that prioritize context, clarity, and trustworthiness. It doesn’t care who stuffed the most keywords. It cares who explained the topic most clearly and most credibly.

The Core Idea — Perplexity AI Ranking Is a Battle for Citation, Not Position

What “Being Cited” Really Means in AI Search

A citation in Perplexity is like being quoted in a research paper. You’re not just visible—you’re endorsed. The AI is essentially saying, “This source helped me think.”

That’s the new gold standard.

Why Traditional SERP Positions Don’t Matter Here

You can rank #1 on Google and still be ignored by Perplexity. Why? Because AI search isn’t about popularity—it’s about extractability and authority. If your content can’t be easily understood, summarized, and trusted, it won’t be cited.

The Paradigm Shift — Why Perplexity AI Is Fundamentally Different

Perplexity as an Answer Engine, Not a List Engine

Perplexity doesn’t show ten results. It shows one synthesized answer backed by a handful of sources. That scarcity makes citations brutally competitive.

How LLMs Decide Which Sources Deserve to Be Quoted

Context Over Keywords

LLMs read meaning, not matches. They look for content that answers questions, not just pages that repeat phrases.

Extractability Over Length

A 1,500-word article beats a 5,000-word monster if the answers are clearer. Think scissors, not spaghetti.

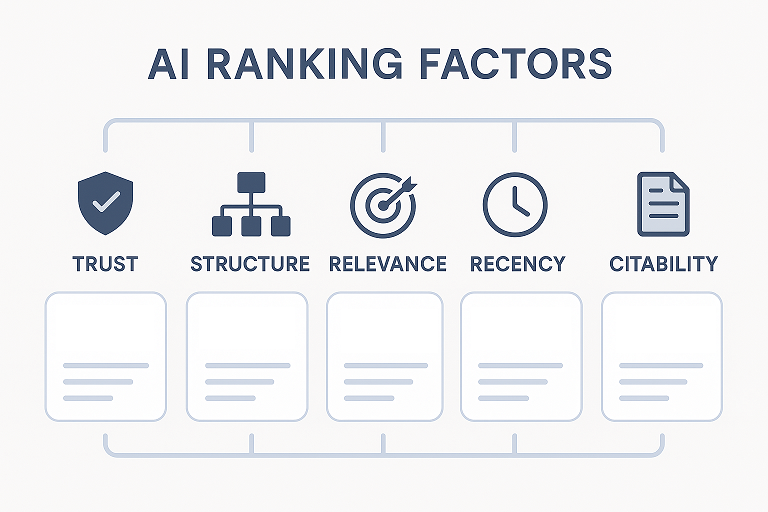

Perplexity AI Ranking Factors — What You Actually Need to Analyze

Trust and Authority Signals

Third-Party Mentions and Citations

Perplexity appears to favor sources that the wider web already treats as trustworthy. If your domain is mentioned in Reddit threads, industry blogs, review sites, or reputable publications, your odds of being cited improve.

Analysis tip: Search your brand or domain across authoritative platforms and forums. Are people referencing you organically?

Original Research and Data Ownership

AI loves sources that add information, not recycle it. Original stats, first-hand experiments, and proprietary insights are citation magnets.

Content Structure and Clarity

The Answer-First Principle

Strong Perplexity-cited content answers the question within the first 100–150 words of a section. No throat-clearing. No storytelling detours.

AI-Friendly Formatting

Clear H2s, H3s, bullet points, numbered steps, and tables make your content easy for AI to digest. If a human can skim it, an LLM can extract it.

Semantic Relevance and Search Intent

Entity Coverage and Topical Authority

Instead of obsessing over one keyword, analyze whether the content covers all related concepts, entities, and sub-questions. Perplexity favors complete thinkers.

Recency and Citability

Freshness as a Trust Multiplier

Updated content signals relevance. Even evergreen topics benefit from recent edits, examples, or data refreshes.

Quotable Insights and Statistics

If your content includes crisp definitions, frameworks, or statistics, it becomes easier for Perplexity to quote you verbatim.

How to Conduct a Perplexity Ranking Audit (Step-by-Step)

Step 1 — Map Real User Questions

List 20–30 natural-language questions your audience actually asks. Not keywords—questions.

Step 2 — Manual Citation Tracking Inside Perplexity

Run each question in Perplexity. Track:

- Which domains are cited

- How often your site appears

- Which competitors dominate citations

Do this weekly. Patterns emerge fast.
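The manual tracking above can be kept in a simple log and tallied with a few lines of Python. This is a minimal sketch, not a Perplexity API: the `weekly_log` data, the domain names, and the helper names `domain_of` and `tally_citations` are all hypothetical, and the cited URLs are assumed to have been copied by hand from each answer. It counts each domain at most once per question, which is one reasonable choice among several.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Return the lowercase hostname of a URL, without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def tally_citations(log: dict[str, list[str]]) -> Counter:
    """Count, per domain, how many tracked questions cited it."""
    counts = Counter()
    for cited_urls in log.values():
        # A set keeps each domain to one count per question;
        # strings that do not parse to a hostname are skipped.
        domains = {domain_of(u) for u in cited_urls}
        domains.discard("")
        counts.update(domains)
    return counts


# Hypothetical log for one week: question -> URLs cited in the answer.
weekly_log = {
    "how do I track citations in Perplexity?": [
        "https://www.example.com/guide",
        "https://competitor-a.example/blog/tracking",
    ],
    "what is AI share of voice?": [
        "https://example.com/share-of-voice",
        "https://competitor-a.example/kpis",
        "https://competitor-a.example/kpis#formula",
    ],
}

for domain, hits in tally_citations(weekly_log).most_common():
    print(f"{domain}: cited in {hits} question(s)")
```

Saving one such log per week makes it easy to compare tallies over time and see which domains keep reappearing.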

Step 3 — Competitor Citation Gap Analysis

Compare your content against cited competitors. Ask:

- Do they answer faster?

- Is their structure cleaner?

- Do they include original insights?

That gap is your roadmap.
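To decide which competitor pages to compare against first, it can help to list the questions where your domain is missing from the citations. The sketch below assumes you already have a mapping from each tracked question to the domains cited for it; the `tracked` data and the `citation_gaps` helper are hypothetical names used for illustration only.

```python
def citation_gaps(log: dict[str, list[str]], own_domain: str) -> dict[str, list[str]]:
    """Map each question that does not cite own_domain to the domains cited instead."""
    gaps = {}
    for question, cited_domains in log.items():
        if own_domain not in cited_domains:
            gaps[question] = sorted(set(cited_domains))
    return gaps


# Hypothetical tracking data: question -> domains cited in the answer.
tracked = {
    "q1": ["example.com", "competitor-a.example"],
    "q2": ["competitor-a.example", "competitor-b.example"],
    "q3": ["competitor-b.example"],
}

for question, rivals in citation_gaps(tracked, "example.com").items():
    print(f"{question}: cited instead -> {', '.join(rivals)}")
```

Each question in the result is a candidate for the three checks above: answer speed, structure, and original insight.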

Step 4 — Measuring Share of Voice in AI Answers

AI Share of Voice = Your citations ÷ Total citations across tracked queries

This is the new KPI.
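The ratio above is simple enough to compute by hand, but a small function keeps the calculation consistent from week to week. This is a sketch under stated assumptions: the citation counts and domain names are made up, and `share_of_voice` is a hypothetical helper, not part of any tool.

```python
def share_of_voice(citations_by_domain: dict[str, int], own_domain: str) -> float:
    """Return own_domain's citations divided by all citations across tracked queries."""
    total = sum(citations_by_domain.values())
    if total == 0:
        return 0.0  # nothing tracked yet, so there is no share to report
    return citations_by_domain.get(own_domain, 0) / total


# Hypothetical totals across one week of tracked queries.
weekly_counts = {
    "example.com": 6,
    "competitor-a.example": 10,
    "competitor-b.example": 4,
}

sov = share_of_voice(weekly_counts, "example.com")
print(f"AI Share of Voice: {sov:.0%}")  # 6 of 20 citations -> 30%
```

Only the trend is meaningful if the question set stays the same between runs, so it is worth fixing the list of tracked queries before comparing weeks.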

Using Tools to Analyze Perplexity AI Rankings

Perplexity Tracking with SEO Tools

Some modern SEO tools now track Perplexity citations directly. This automates what used to be a manual grind.

Calculating AI Search Share of Voice

Instead of SERP visibility, you measure citation visibility. This tells you how often AI chooses you as a source.

Monitoring Referral Traffic from Perplexity AI

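Set up analytics to track referral traffic from Perplexity, which typically appears under the perplexity.ai domain (confirm the exact referrer hostname in your own reports). The numbers are small, but these visitors tend to arrive with high intent.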
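If your analytics setup lets you export raw referrer URLs, a small filter can separate Perplexity visits from everything else. This sketch assumes the referrer hostname is perplexity.ai or one of its subdomains; check the hostnames that actually appear in your own data. `is_perplexity_referrer` and the sample referrers are hypothetical.

```python
from urllib.parse import urlparse


def is_perplexity_referrer(referrer: str) -> bool:
    """True if the referrer's hostname is perplexity.ai or a subdomain of it."""
    host = (urlparse(referrer).hostname or "").lower()
    return host == "perplexity.ai" or host.endswith(".perplexity.ai")


# Hypothetical referrer values exported from an analytics tool.
referrers = [
    "https://www.perplexity.ai/search?q=example",
    "https://www.google.com/",
    "https://perplexity.ai/",
    "",
]

perplexity_visits = [r for r in referrers if is_perplexity_referrer(r)]
print(f"{len(perplexity_visits)} of {len(referrers)} visits came from Perplexity")
```

Matching on the parsed hostname rather than on a substring avoids counting look-alike domains that merely contain the word "perplexity".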

How to Optimize Content After Your Analysis

Writing for Extractability

Every section should answer one question clearly. If a paragraph can’t stand alone, rewrite it.

Designing Pages for AI Citation

Think like an editor. Would this paragraph be easy to quote? If yes, you’re on the right path.

Turning Pages into “Citation Assets”

Your goal isn’t ranking pages—it’s building reference-worthy resources.

Common Mistakes When Analyzing Perplexity AI Rankings

Chasing Keywords Instead of Questions

AI search starts with intent, not syntax.

Ignoring Content Structure

Messy formatting equals invisible content.

Treating AI Search Like Traditional SEO

This isn’t about links and anchors anymore. It’s about clarity, trust, and usefulness.

The Future of Search Visibility in an AI-First World

Why GEO and AEO Will Replace Pure SEO Metrics

Clicks are fading. Citations are rising. Visibility now means being part of the answer.

How Brands Win by Becoming Trusted Sources

The winners won’t be louder—they’ll be clearer, smarter, and more helpful.

Conclusion

Analyzing search rankings in Perplexity AI isn’t about chasing positions—it’s about earning trust. When you shift your mindset from “Where do I rank?” to “Why would an AI cite me?”, everything changes. Structure better. Answer faster. Publish with authority. In the world of AI search, being quoted beats being listed every single time.

FAQs

1. Does ranking #1 on Google guarantee citations in Perplexity AI?

No. Google rankings and Perplexity citations use completely different evaluation logic.

2. How often should I audit my Perplexity AI visibility?

Weekly for priority queries is ideal, especially in competitive niches.

3. What type of content gets cited most by Perplexity?

Clear, structured content with direct answers, original insights, and strong authority signals.

4. Can small websites compete with big brands in Perplexity AI?

Yes. Clarity and expertise often beat brand size in AI citation battles.

5. Is Perplexity AI optimization different from ChatGPT optimization?

The principles overlap, but Perplexity places far more emphasis on source attribution and citability.